Unveiling Secrets to AI Agents: Exploring the Interplay of Conversation Type, Self-Disclosure, and Privacy Insensitivity

Copyright ⓒ 2024 by the Korean Society for Journalism and Communication Studies

Abstract

This study investigated the dynamics of user interactions with AI agents, focusing on how the types of conversation users have with an AI agent relate to their self-disclosure to, and privacy insensitivity toward, AI agents. The study also examined how conversation types interact with users’ attitudes toward the machine (i.e., perceived humanness and intimacy perception). Results showed that both functional and emotional conversations with AI agents were significantly associated with self-disclosure to AI agents: the more functional or emotional conversation users had with AI agents, the more likely they were to disclose their information to the devices. Moreover, the association for emotional conversation was significantly stronger than that for functional conversation. However, only emotional conversation was associated with AI privacy insensitivity: the more emotional conversation users had with AI agents, the more insensitive they tended to be to privacy issues related to AI agents. In addition, perceived humanness strengthened the relationship between functional conversation and self-disclosure, whereas emotional conversation with AI agents was more positively related to AI privacy insensitivity when users perceived the agents as human-like. Implications and limitations are discussed.

Keywords:

AI agents, self-disclosure, AI privacy insensitivity, conversation type, perceived humanness, intimacy perception

Artificial intelligence (AI) is becoming increasingly embedded in daily life through technologies such as voice assistants (e.g., Siri, Google Assistant) and AI agents (e.g., Amazon Echo, Google Home, Apple HomePod). About 50% of U.S. consumers use AI-assisted voice search daily (Yancey, 2022). According to Cognitive Market Research (2024), the global AI speaker market, valued at USD 5,812.5 million in 2023, is expected to grow at a compound annual growth rate of 26.50% from 2023 to 2030. In particular, AI agents enable individuals to carry out a wide range of daily activities, including listening to music, setting alarms, discovering recipes, managing smart home devices, and making phone calls (CBS News, 2018; Hunter, 2022).

AI agents enhance user experience and convenience, allowing people to access and use technologies more interactively and in a more coordinated way (Katz, 2024; Sundar, 2020). However, concerns have also emerged about the massive but inconspicuous collection of personal information through human-AI interactions. Manheim and Kaplan (2019) warn that while AI tools provide convenience and benefits, they serve as “the engine behind Big Data Analytics and the Internet of Things,” which is designed to “capture personal information, create detailed behavioral profiles, and sell us goods and agendas” (p. 120). Consequently, “privacy, anonymity, and autonomy are the main casualties of AI’s ability to manipulate choices in economic and political decisions” (Manheim & Kaplan, 2019, p. 108).

Prior research on human-to-AI interaction has devoted considerable attention to user privacy concerns (Dubois et al., 2020; Lau et al., 2018; Liao et al., 2019; Lutz & Newlands, 2021). These studies provide insights into how users perceive AI agents and how they protect their privacy when using AI agents and other non-embodied virtual personal assistants (Guzman, 2019; Hermann, 2022; Lutz & Newlands, 2021). Lutz and Newlands (2021) identify types of privacy concerns related to AI agents (e.g., social privacy, institutional privacy) and their influence on privacy protection behaviors (e.g., technical protection, data protection, social protection). Prior findings have predominantly focused on dispositional or contextual factors related to user privacy behaviors (e.g., AI-related trust and efficacy, previous experience).

However, two research gaps exist concerning privacy-related behaviors. First, little attention has been given to how users interact with AI agents and disclose themselves to the machines. Recent studies (Ho et al., 2018; Meng & Dai, 2021) suggest that the types of conversation users engage in influence their mental states and behaviors toward their conversational partners. For instance, Meng and Dai (2021) showed that emotional support from a conversational partner (e.g., a chatbot) could effectively reduce users’ stress and worry, which may in turn affect users’ privacy-related behaviors (Sprecher & Hendrick, 2004). Therefore, the present study aims to examine the effects of the different types of conversation users have with AI agents on users’ cognition (e.g., AI privacy insensitivity) and behaviors (e.g., self-disclosure).

Second, the distinctive attributes of AI agents, compared with other media platforms, have been overlooked. These attributes include human-like speech and interaction and emotion-sensing capabilities, which are referred to as anthropomorphic characteristics (e.g., Kim & Im, 2023). Users interact with AI agents through voice-based assistant systems (e.g., Alexa, Siri; Kim & Im, 2023; Pradhan et al., 2019), which simulate interaction patterns akin to those with human partners (Meng & Dai, 2021; Peter & Kühne, 2018). Previous studies (e.g., Cyr et al., 2009) have demonstrated that users are more receptive to machines with human-like characteristics. This suggests that more anthropomorphic AI agents may shape users’ perceptions of the agents in combination with the type of conversation, producing different cognitive and behavioral outcomes related to privacy.

Therefore, this study aims to investigate the interplay of two factors, user-initiated conversation patterns with an AI agent and attitudes toward the machine (i.e., perceived humanness and intimacy), in shaping users’ privacy perceptions and interactions with the AI agent. The research is expected to address a gap in the existing literature on human-to-AI interaction. Furthermore, the results will help professionals in the AI sector understand crucial factors that increase users’ openness and reduce their psychological resistance when using AI agents.

Self-disclosure and Privacy Insensitivity with AI Agents

Self-disclosure refers to communication in which individuals reveal themselves to others during an interaction (Green et al., 2006). The information revealed may be descriptive or evaluative, involving thoughts, feelings, preferences, and goals (Ignatius & Kokkonen, 2007). In light of this general definition, this study conceives of self-disclosure in the present context as a user revealing personal and private information through a transaction between the user and an AI agent. Research on self-disclosure with communication technologies suggests that disclosing oneself has psychological and relational benefits (Ho et al., 2018). Interacting with technology, particularly AI agents, would inevitably lead users to disclose personal information to the device, including tastes, preferences, lifestyle, and even emotional states. This is, at least in part, because, unlike other digital devices, AI agents give users the impression of a virtual human: users operate the machine by speaking, as if conversing with someone. Once activated by a wake word (e.g., “Hey Google” or “Alexa”), agents act like intelligent experts with answers to all of users’ questions. Further, the machines may be viewed as non-judgmental, objective conversation agents, because AI agents neither form judgments or opinions about users nor have motivations driven by self-interest (Lucas et al., 2014). All they appear to do is respond to and assist users. In this sense, AI agents could be trusted more than human partners. As suggested by the computers-are-social-actors (CASA) paradigm (Nass & Moon, 2000; Reeves & Nass, 1996), enhanced human-to-machine interaction enables AI-operated assistive technologies to be anthropomorphized, leading users to socialize with the devices and perceive them as social actors (Gambino et al., 2020). The anthropocentric approach prioritizes human considerations. In the context of AI agents, this translates into a focus on designing AI agents whose functionality aligns with human needs and behaviors. The anthropomorphism of AI agents and the influence of human-centered factors on user interactions with AI agents may lead to a lack of privacy awareness (Hermann, 2022; Kim & Im, 2023).

Meanwhile, AI devices powered by machine learning generally require massive data collection from users to improve their performance and services (Alpaydin, 2020). Given how AI devices function, privacy naturally becomes an issue for users (Cheng & Jiang, 2020). Research on privacy in the context of online and intelligent media points to specific mechanisms through which individuals become insensitive to their privacy, such as privacy fatigue, which causes emotional exhaustion and cynicism about privacy (Choi et al., 2018; Hinds et al., 2020), and the privacy paradox, in which people continue to voluntarily disclose personal information (Hallam & Zanella, 2017; Kokolakis, 2017). Despite the emergence of this phenomenon in media use, previous studies have not developed a concept that defines a state in which an individual is emotionally exhausted, skeptical, and eventually insensitive to privacy. While a wealth of research has studied privacy fatigue (Choi et al., 2018), privacy cynicism (Hoffmann et al., 2016; Lutz et al., 2020), and privacy disengagement (Carver et al., 1989), researchers have mainly treated each as a separate concept and rarely explored the links between them (Choi et al., 2018; Hopstaken et al., 2015). Choi et al. (2018) showed that individuals who feel greater fatigue or cynicism about managing their privacy are likely to give up protecting it or addressing privacy leaks. As such, previous studies have focused on the relationship between each concept and privacy behaviors. Taken individually, these concepts and their loosely specified interrelations make it difficult to explain how individuals respond to recent privacy events.

Given that privacy problems, such as a Google Home security error in early 2020, have been covered by mass media in recent years, individuals may become “desensitized” to privacy leaks or threats, which come to be considered “normal and acceptable parts of life” (J. Li et al., 2023). Further, in situations where individuals cannot properly manage and protect their privacy, regardless of their capacity to defend it, they may grow tired of investing effort in privacy management and, with greater disengagement, even give up protecting their privacy (Corradini, 2020). Considering these points, individuals could become numb to privacy threats both emotionally (e.g., privacy fatigue) and behaviorally (e.g., disengagement) while enjoying the functional or emotional benefits of AI agents. Although users often advocate the value of privacy and express concern about potential breaches, they tend to be less active in taking precautions to protect it. This gap between concerns and behaviors, known as the “privacy paradox” (Brown, 2001; Hallam & Zanella, 2017; Norberg et al., 2007; Sundar & Kim, 2019; Taddicken, 2014), speaks to the complicated nature of privacy when it comes to information and communication technologies.

Putting these discussions together, this study proposes the concept of privacy insensitivity to capture this state, drawing on the notion of a “lack of privacy awareness” as informed by the privacy paradox literature (Bartsch & Dienlin, 2016; Hoffmann et al., 2016) and the literature on privacy-related behavior (e.g., Lutz et al., 2020). More precisely, privacy insensitivity reflects an individual’s attitude of becoming numb toward privacy threats and feeling exhausted by, and disengaged from, protecting their private information. Accordingly, the current study defines AI privacy insensitivity as an individual’s attitude of being careless about privacy threats, emotionally and behaviorally, while interacting with an AI agent. Thus, even though third parties could potentially access the information, a user who is insensitive to privacy is unlikely to think twice before sharing his or her phone number, school address, or other sensitive information with AI agents.

Following the anthropocentric approach (Hermann, 2022; Kim & Im, 2023), this study goes beyond the general pattern of privacy insensitivity by focusing on how users interact with increasingly human-like AI agents as the primary source of users’ privacy insensitivity. AI agents have reached a level of sophistication at which they can emulate human behaviors and interactions, making it challenging for users to discern whether they are interacting with a machine or a human. Users may therefore let their guard down and pay less attention to privacy, not recognizing the potential for data collection and surveillance. Prior research supports the view that AI agents can blur the line between humans and machines (Lutz & Tamò-Larrieux, 2018, 2020). Given that the human-like qualities of AI agents and the anthropocentric approach are becoming commonplace, it is meaningful to examine how the way users interact with AI agents relates to how much attention they pay to privacy.

Self-disclosure during User-AI Agent Interaction

Users may feel comfortable disclosing private information when using AI devices. Lucas et al. (2014) indicate that users tend to be less concerned about their public image when revealing their inner selves to a machine. The perception that chatbots do not form opinions of their own seems to diminish users’ reluctance to disclose personal information. The same may apply to AI agents, which share similar characteristics with chatbots: AI agents are viewed as non-judgmental, just like chatbots, while their interaction with users is much more human-like. Chatbots are text-based conversational agents powered by automated writing programs (Dörr, 2016). Similarly, AI agents are natural language processing (NLP) applications and, as such, are designed to work with and process textual data, as chatbots do. NLP, which is closely related to text mining, can automatically process and analyze unstructured text, for example by extracting information of interest and displaying it in a structured format suitable for computational analysis, or by applying transformations such as summarization or translation to make text easier for human readers to digest (Chen et al., 2020).

What distinguishes AI agents from chatbots is the inclusion of automatic speech recognition for input, which transforms spoken commands into textual data that the NLP dialogue management system can process, and of text-to-speech synthesis for output, which transforms machine-generated text into spoken output (Gunkel, 2020). Therefore, AI agents equipped with NLP and anthropomorphic features (e.g., a human-like name, an informal language style; Ramadan et al., 2022) could interpret users’ verbal expressions faster and more accurately and respond in a human-like way through computer-generated voices. Given these different forms of interaction, user-machine interaction with AI agents will more closely resemble human-to-human conversation¹ and will thus be richer. These technological advantages of AI agents may translate into greater disclosure of private information by users.
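The speech-in, text-in-the-middle, speech-out chain described above can be illustrated with a toy sketch. It is a hypothetical illustration, not how any commercial agent is built: `recognize_speech`, `manage_dialogue`, and `synthesize_speech` are invented stand-ins for the automatic speech recognition, NLP dialogue management, and text-to-speech stages, and the "audio" is simply UTF-8 bytes. What it shows is that every spoken turn, whether functional ("How is the weather today?") or emotional ("I am tired"), passes through a machine-processable text transcript.

```python
from dataclasses import dataclass


@dataclass
class Turn:
    """One user-agent exchange as it might pass through a voice pipeline."""
    transcript: str     # text produced by the (hypothetical) ASR stage
    reply_text: str     # text produced by the (hypothetical) dialogue manager
    reply_audio: bytes  # output of the (hypothetical) TTS stage


def recognize_speech(audio: bytes) -> str:
    # Hypothetical ASR stub: real ASR decodes a waveform; here "audio" is UTF-8 text.
    return audio.decode("utf-8")


def manage_dialogue(text: str) -> str:
    # Hypothetical dialogue-management stub with two toy keyword rules,
    # one for a functional request and one for an emotional remark.
    lowered = text.lower()
    if "weather" in lowered:
        return "Here is today's forecast."
    if "tired" in lowered:
        return "That sounds hard. Would you like some relaxing music?"
    return "Sorry, I didn't catch that."


def synthesize_speech(text: str) -> bytes:
    # Hypothetical TTS stub: real TTS renders audio; here we just encode the text.
    return text.encode("utf-8")


def handle_turn(audio: bytes) -> Turn:
    """Speech in -> text -> dialogue management -> text -> speech out."""
    transcript = recognize_speech(audio)
    reply = manage_dialogue(transcript)
    return Turn(transcript, reply, synthesize_speech(reply))


if __name__ == "__main__":
    for utterance in (b"How is the weather today?", b"I am tired"):
        turn = handle_turn(utterance)
        print(turn.transcript, "->", turn.reply_text)
```

The stubs are deliberately trivial; the design point is only the ordering of stages, in which the user's words exist as text before any reply is generated.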

Beyond this general expectation, the current study takes a step further, investigating the more nuanced possibility that the degree of self-disclosure to and privacy insensitivity toward AI agents differs across types of user-to-AI interaction. Interactions between AI agents and users can be categorized into two main types: functional and emotional conversations (Lee & Kim, 2019; Lopatovska et al., 2019; Sundar et al., 2024). A functional conversation is a form of dialogue that allows users to operate the various functions or perform the specific tasks built into the agents. For example, through functional conversations, AI agent users usually perform tasks linked to some level of human intelligence (Broussard, 2018), such as listening to music, setting alarms, checking weather information, or navigating to a destination. By contrast, an emotional conversation occurs when users simply want to talk with the agents. Users turn to an AI agent and use its communication and empathy/responsiveness functions depending on their situation, mood, or emotional state. During an emotional conversation, the AI agent is likely to be treated as a conversation partner (Gehl & Bakardjieva, 2017). Emotional conversation may not be what AI agents were initially designed for, but it points to a possibility that goes beyond the common definition of AI (Guzman & Lewis, 2020), which focuses on the pragmatic and task-oriented aims of the technology. Although not an AI agent, Replika, a personal chatbot developed by Eugenia Kuyda, was designed to serve as a friend to users, a “digital companion with whom to celebrate victories, lament failures, and trade weird Internet memes” (Pardes, 2018). Such an emotional bot suggests that users may want a caring chatbot capable of chatting or talking in the emotional domain, beyond simply completing requested tasks. Indeed, a report revealed that people have emotional conversations with AI agents, saying things such as “I am tired” or “How are you feeling” (Kang, 2019).

A primary feature of emotional conversation with AI agents, as opposed to functional conversation, is that it allows users to express and deal with their emotions in the moment. Psychological and emotional bonding (i.e., attachment) can even take the form of companionship, (perceived) friendship, or love (e.g., Hernandez-Ortega & Ferreira, 2021; Ki et al., 2020; Ramadan et al., 2022). Moreover, research has long suggested that expressing emotions has various positive effects (Pennebaker, 1997). If individuals share their emotions, especially negative ones, with others, they can relieve the distress of negative feelings (Meng & Dai, 2021; Pennebaker, 1997). Given the benefits of sharing emotions, individuals feel inclined to disclose their positive or negative emotions to people around them (Laurenceau et al., 1998; Meng & Dai, 2021). Users are aware that their AI agent is readily available to listen and respond to whatever they say. Emotional conversation with AI agents is thus likely to occur, especially when users feel a need to vent their emotions and their AI agent is the only appropriate partner available at the moment. Once started, an emotional conversation is expected to contain more personal and private information about the user than a functional conversation (Sprecher & Hendrick, 2004).

Taken together, AI agents’ enhanced interactivity, which mimics human-to-human conversation, would likely lead users to engage with the device and to behave during the interaction much as they would with a human partner. As the CASA paradigm (Reeves & Nass, 1996) suggests, such user-to-AI interaction likely encourages users to disclose themselves to the machine as naturally as they do with human partners (Ho et al., 2018). Thus, this study expects that interacting with AI agents in general (functional and emotional conversations alike) is positively associated with self-disclosure to, and privacy insensitivity toward, AI agents. However, given the distinctive characteristics of the two types of conversation (functional versus emotional), this study expects greater self-disclosure and privacy insensitivity during emotional conversation. Our expectations are summarized in the following hypotheses:

H1: Functional conversation with an AI agent will be significantly associated with users’ a) self-disclosure to the device and b) AI privacy insensitivity, even after controlling for emotional conversation.

H2: Emotional conversation with an AI agent will be significantly associated with users’ a) self-disclosure to the device and b) AI privacy insensitivity, even after controlling for functional conversation.

H3: Emotional conversation with an AI agent will be more strongly associated than functional conversation with users’ a) self-disclosure to the device and b) AI privacy insensitivity.
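One conventional way to formalize H1 through H3, offered here as an illustrative sketch because the analytic model is not specified in this section, is a multiple regression in which both conversation types enter together, so that each coefficient is estimated while controlling for the other. In this sketch, Y stands for either outcome (self-disclosure for part a, AI privacy insensitivity for part b), F and E are respondent i's functional and emotional conversation scores, and C is an assumed vector of control variables (for example, perceived risk and privacy concerns).

```latex
\begin{aligned}
Y_i &= \beta_0 + \beta_1 F_i + \beta_2 E_i + \boldsymbol{\gamma}^{\top}\mathbf{C}_i + \varepsilon_i \\[4pt]
\text{H1: } \beta_1 &\neq 0 \quad (\text{expected } \beta_1 > 0) \\
\text{H2: } \beta_2 &\neq 0 \quad (\text{expected } \beta_2 > 0) \\
\text{H3: } \beta_2 &> \beta_1, \quad \text{i.e., a test of } H_0\!: \beta_2 - \beta_1 = 0
\end{aligned}
```

Comparing the two coefficients directly, as H3 requires, is most interpretable when F and E share a metric; the mean-score indices described in the Method section place both on the same 1-to-5 scale.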

Moderation Effects by Perceived Humanness and Intimacy

Anthropomorphism, defined as “the assignment of human traits or qualities such as mental abilities” (Kennedy, 1992, p. 3), provides a unifying framework for understanding the diverse ways in which human characteristics are attributed to non-human entities. Nowak and Biocca (2003) further describe anthropomorphism as the process of judging others’ humanity, intelligence, and social potential. Under this umbrella concept, perceived humanness has been conceptualized as an individual’s perception of the extent to which a technology has a human form or human characteristics (Lankton et al., 2015). In line with this, the current study defines perceived humanness as the extent to which individuals perceive AI agents as human-like, with the perception being a product of the interplay between user psychology and technological attributes.

Social presence theory and social response theory can explain the influence of technological attributes on perceived humanness. Social presence theory posits that the characteristics of a technology influence whether it is perceived as sociable and personal (Short et al., 1976), with the salience of “social presence” shaping how individuals use or connect with the technology. Similarly, social response theory suggests that individuals tend to respond “socially” to technology with high social presence as though it were human (Gefen & Straub, 1997; Nass et al., 1994). Guzman and Lewis (2020) note that human-like cues within technology elicit social responses from users even when users are not misled into believing that the technology is a human counterpart.

Viewed from this perspective, AI agents possess various features that translate into social presence, leading users to perceive them as human-like. For example, AI agents take turns in conversation using a voice similar to a human voice, and assigning a gender to an AI agent serves as an anthropomorphic cue that contributes to humanoid embodiment (Eyssel & Hegel, 2012). This interactivity may increase users’ perception of social presence, predisposing them to personify AI agents (Purington et al., 2017). As interfaces increasingly mimic human traits, they elicit more natural social responses from users (Nass & Moon, 2000). The growing prevalence of anthropomorphic design in technology indicates a shift in social dynamics, in which the social etiquette and behavioral norms typically observed in human-to-human interaction are now applied to human-computer relationships.

This shift in social dynamics can have significant implications for user behavior during interactions with AI agents, particularly across conversation types. During functional conversations, users engage with AI agents to complete specific tasks or operate various functions. The CASA paradigm (Nass et al., 1994) provides a framework for understanding how perceived humanness can moderate the relationship between functional conversation and self-disclosure. Users may not typically feel compelled to self-disclose in functional conversations, where the primary focus is task completion or information exchange. However, when the AI agent is perceived as more human-like, users may apply social norms of reciprocity and trust, leading to increased self-disclosure even in these task-oriented interactions. Consequently, when perceived humanness is high, users may be more inclined to self-disclose during functional conversations with AI agents, as they consciously or unconsciously treat the interaction as more social and personal. Similarly, an AI agent’s perceived humanness can significantly influence the extent and depth of self-disclosure during emotional conversations. This moderation effect can be explained by social presence theory (Short et al., 1976): when AI agents possess human-like features and exhibit high social presence, users are more likely to perceive them as warm, empathetic, and trustworthy, which may encourage deeper self-disclosure when users share their feelings.

In addition, the degree to which users perceive an AI agent as human-like can significantly shape the relationship between functional conversation and AI privacy insensitivity. When an AI agent is imbued with more human-like qualities, users may develop stronger familiarity and rapport with it. The moderating effect of perceived humanness on the relationship between functional conversation and AI privacy insensitivity can be understood through trust formation in human-computer interaction: familiarity and rapport foster trust, which in turn can reduce users’ privacy apprehensions and increase their willingness to disclose personal details during functional conversations, making them less sensitive to privacy concerns. The theory of anthropomorphism likewise supports a moderating role of perceived humanness in the relationship between emotional conversation and AI privacy insensitivity. When users perceive AI agents as more human-like, they may ascribe human attributes such as empathy, compassion, and emotional intelligence to the agent. These attributions can increase trust in, and emotional connection with, the AI agent, which may reduce privacy concerns and increase the likelihood of sharing intimate information during emotional conversations.

In line with this reasoning, this study expects that humanness perception will moderate the relationships hypothesized earlier between conversations with AI agents and self-disclosure and AI privacy insensitivity. Interacting with AI agents, whether functionally or emotionally, is likely to be associated with more self-disclosure and less concern about privacy among those who perceive their AI agent as human-like. Building on this rationale, this study proposes the following hypothesis:

H4: The relationship between conversation with AI agents (both functional and emotional) and a) users’ self-disclosure and b) AI privacy insensitivity will be stronger for those with higher levels of humanness perception of AI agents.

Similar to humanness perception, intimacy, once established, is expected to moderate the effects of conversations with AI agents on self-disclosure and AI privacy insensitivity. According to social penetration theory, interpersonal communication evolves from shallow to deeper levels as communication partners reveal personal information to each other (Altman & Taylor, 1973). Relationship development and intimacy occur through self-disclosure (Derlega et al., 2008). In this sense, intimacy is defined as a quality of interactions between persons (e.g., Patterson, 1982). A similar process may occur between AI agents and users. The human-like attributes of AI agents can make it possible for users to develop a sense of intimacy with the machine (Purington et al., 2017). Although self-disclosure is inherently one-way, from the user to the AI agent, an intimate relationship may form in the user’s mind as interactions continue with a voice assistant system that has a name, a gender, a character, and even a personality. Once formed, this sense of intimacy likely has implications not only for how often the AI agent is used but also for the nature of that use. With an intimate mindset activated, the user will feel more comfortable revealing themselves and their personal information to the AI agent during functional or emotional conversations. This suggests that intimacy moderates the association between conversation with AI agents and both self-disclosure and AI privacy insensitivity: users’ interactions with their AI agents should be associated with more self-disclosure and higher AI privacy insensitivity when the sense of intimacy is high. Based on this rationale, this study proposes the following intimacy-based moderation hypothesis:

H5: The relationship between conversation with AI agents (both functional and emotional) and a) users’ self-disclosure and b) AI privacy insensitivity will be stronger for those with higher intimacy perception toward AI agents.
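H4 and H5 describe moderation. A common way to test such hypotheses, sketched here as an assumption rather than as the authors' reported procedure, is to add a product term to the regression, Y = b0 + b1·X + b2·M + b3·(X × M), where X is a conversation score and M is the moderator (perceived humanness or intimacy); a positive b3 means the X-Y slope is steeper at higher M. The sketch below uses only the Python standard library, fits the model by ordinary least squares on mean-centered predictors, and checks it on simulated data whose true interaction coefficient (0.3) is invented for illustration. None of its numbers are study results.

```python
import random
import statistics


def ols(rows, y):
    """Ordinary least squares via the normal equations (X'X)b = X'y,
    solved by Gauss-Jordan elimination with partial pivoting.
    `rows` holds the predictor values per case; an intercept is prepended."""
    X = [[1.0, *r] for r in rows]
    k = len(X[0])
    # Augmented matrix [X'X | X'y].
    A = [[sum(x[i] * x[j] for x in X) for j in range(k)]
         + [sum(x[i] * yi for x, yi in zip(X, y))]
         for i in range(k)]
    for col in range(k):
        pivot = max(range(col, k), key=lambda r: abs(A[r][col]))
        A[col], A[pivot] = A[pivot], A[col]
        p = A[col][col]
        A[col] = [v / p for v in A[col]]
        for r in range(k):
            if r != col:
                factor = A[r][col]
                A[r] = [a - factor * b for a, b in zip(A[r], A[col])]
    return [A[i][k] for i in range(k)]


def fit_moderation(x, m, y):
    """Fit y = b0 + b1*xc + b2*mc + b3*(xc*mc), with xc and mc mean-centered.
    Returns [b0, b1, b2, b3]."""
    mx, mm = statistics.fmean(x), statistics.fmean(m)
    rows = [(a - mx, b - mm, (a - mx) * (b - mm)) for a, b in zip(x, m)]
    return ols(rows, y)


def simple_slope(coefs, m_centered):
    """Conditional effect of x on y at a centered moderator value: b1 + b3*m."""
    return coefs[1] + coefs[3] * m_centered


if __name__ == "__main__":
    rng = random.Random(7)
    n = 4000
    # Simulated (hypothetical) data: x = conversation score, m = moderator.
    x = [rng.gauss(3.0, 0.8) for _ in range(n)]
    m = [rng.gauss(2.6, 1.0) for _ in range(n)]
    # Invented true model with a positive interaction (b3 = 0.3).
    y = [1.0 + 0.4 * a + 0.2 * b + 0.3 * a * b + rng.gauss(0, 1)
         for a, b in zip(x, m)]
    coefs = fit_moderation(x, m, y)
    sd_m = statistics.stdev(m)
    print("b3 (interaction):", round(coefs[3], 3))
    print("slope at -1 SD of m:", round(simple_slope(coefs, -sd_m), 3))
    print("slope at +1 SD of m:", round(simple_slope(coefs, +sd_m), 3))
```

Centering changes b1 and b2 into effects at the moderator's mean but leaves the interaction coefficient b3 unchanged; comparing simple slopes at one standard deviation below and above the moderator's mean is one conventional way to describe a "stronger relationship for those with higher" humanness or intimacy.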

METHOD

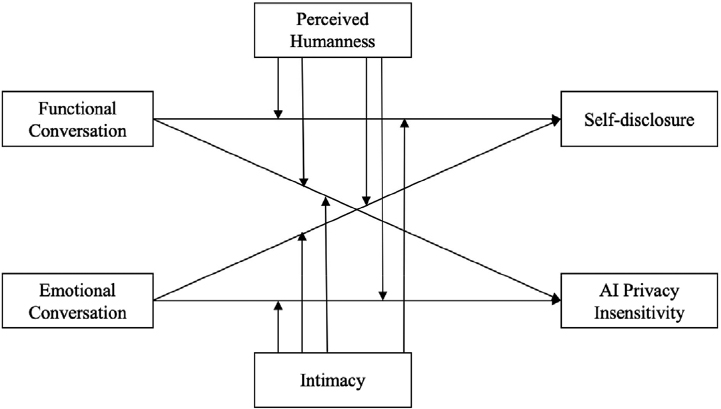

This study aimed to investigate the impact of conversation type (functional and emotional), perceived humanness, and intimacy on users’ self-disclosure and AI privacy insensitivity toward AI agents. Figure 1 shows the conceptual framework of this research. An online survey was conducted to test the proposed hypotheses.

Sampling Procedures

A total of 650 participants were recruited through Macromill Embrain, a professional research company in Korea. Quota sampling was used based on the age (14-19, 7.8%; 20-29, 17.6%; 30-39, 18.4%; 40-49, 20.8%; 50-59, 21.2%; 60-69, 14.2%) and gender (male, 50.1%; female, 49.9%) proportions of Korea. Invitation emails were sent to randomly selected members of Macromill Embrain’s online panel until the required proportions of each age and gender group were reached. Participants who agreed to take part were directed to a questionnaire and asked to answer questions about their AI agent usage. The survey took approximately 20-25 minutes. After participants with missing values were excluded, 627 participants were retained for the final analysis (male 50.6%; average age = 41.00, range 14-68). Table 1 shows the sample profile of this study.
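The quota procedure described above, in which panel members are invited until each group's share is filled, can be sketched as follows. This is a simplified, hypothetical illustration rather than Macromill Embrain's actual system: it applies only the age proportions listed above to a target of 650, rounds each target to the nearest whole person, and assumes gender quotas would be tracked the same way; `QuotaTracker` and `quota_targets` are invented names.

```python
AGE_PROPORTIONS = {  # age-group proportions reported in the text
    "14-19": 0.078, "20-29": 0.176, "30-39": 0.184,
    "40-49": 0.208, "50-59": 0.212, "60-69": 0.142,
}


def quota_targets(proportions, total):
    """Convert proportions into whole-person quota targets (nearest integer)."""
    return {group: round(total * p) for group, p in proportions.items()}


class QuotaTracker:
    """Admit a respondent only while that respondent's group quota is still open."""

    def __init__(self, targets):
        self.targets = dict(targets)
        self.filled = {group: 0 for group in targets}

    def admit(self, group):
        if self.filled[group] >= self.targets[group]:
            return False  # quota full: screen this respondent out
        self.filled[group] += 1
        return True

    def complete(self):
        """True once every group has reached its target."""
        return all(self.filled[g] >= t for g, t in self.targets.items())


if __name__ == "__main__":
    targets = quota_targets(AGE_PROPORTIONS, 650)
    print(targets, sum(targets.values()))
```

With these proportions the rounded targets happen to sum to exactly 650; in general, independent rounding may not, and a real quota system would need a rule for distributing the remainder.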

AI Agent Usage in General and Control Variables

Participants were asked how long they had used AI agents: less than one month, 1 to 3 months, 3 to 6 months, 6 months to 1 year, 1 to 2 years, or more than 2 years. Frequency of AI agent usage was measured with the following options: once a week, 2-3 days a week, once a day, and several times a day. Perceived risk of AI agents, adapted from Malhotra et al. (2004), was measured with four items on a 5-point Likert scale (1 = Strongly disagree; 5 = Strongly agree; Cronbach’s α = .69, M = 3.51, SD = 0.63): “In general, it would be risky to give personal information to AI agents,” “There would be high potential for loss associated with giving personal information to AI agents,” “Providing AI agents with personal information would involve many unexpected problems,” and “I would feel safe giving personal information to AI agents” (reverse-coded). Privacy concerns were measured with eight items on a 5-point Likert scale (1 = Strongly disagree; 5 = Strongly agree; adapted from Malhotra et al., 2004; Cronbach’s α = .92, M = 3.66, SD = 0.70): “I am concerned about others’ obtaining my personal information via my online behaviors,” “I am concerned about my personal information left in my previous digital devices (e.g., laptops, cellphones),” “I am concerned about my personal information left online,” “I am concerned about online companies asking too much information when I sign in the websites,” “I’m concerned about my online IDs stolen,” “I’m concerned with online privacy issues,” “I’m doubtful for those who don’t disclose themselves online,” and “I’m concerned about my personal information (e.g., photos, name) stolen.”
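Two computations in this subsection can be made concrete: reverse-coding the fourth perceived-risk item and computing Cronbach's α = k/(k − 1) × (1 − sum of item variances / variance of the total score). The sketch below is a generic textbook implementation using Python's `statistics` module, not the authors' analysis script, and the response vectors are invented. It also illustrates why reverse-coding must precede α: a negatively worded item left unreversed can drag α down sharply.

```python
import statistics


def reverse_code(score, scale_min=1, scale_max=5):
    """Reverse a Likert response, e.g., 5 -> 1 and 2 -> 4 on a 1-5 scale."""
    return scale_min + scale_max - score


def cronbach_alpha(items):
    """Cronbach's alpha for k items; `items` is a list of k equal-length
    lists of respondent scores. Uses sample (n - 1) variances throughout."""
    k = len(items)
    totals = [sum(scores) for scores in zip(*items)]  # per-respondent total score
    item_var = sum(statistics.variance(item) for item in items)
    return k / (k - 1) * (1 - item_var / statistics.variance(totals))


if __name__ == "__main__":
    # Invented responses from five respondents to four items;
    # the fourth item is worded in the opposite direction.
    risk_1 = [1, 2, 3, 4, 5]
    risk_2 = [1, 2, 3, 4, 5]
    risk_3 = [1, 2, 3, 4, 5]
    feel_safe = [5, 4, 3, 2, 1]
    raw = [risk_1, risk_2, risk_3, feel_safe]
    recoded = [risk_1, risk_2, risk_3, [reverse_code(s) for s in feel_safe]]
    print(round(cronbach_alpha(raw), 3))      # 0.0: reversed item not recoded
    print(round(cronbach_alpha(recoded), 3))  # 1.0: items now perfectly consistent
```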

Types of Conversations with AI Agents

Measures of functional and emotional conversation were adapted from Broussard (2018), Gehl and Bakardjieva (2017), Lee and Kim (2019), and Lopatovska et al. (2019) and modified to fit AI agent usage situations. Functional conversation was assessed by asking the extent to which participants made utterances such as “What is the day today?” “What is the date today?” “How is the weather today?” “How much do you think it’s raining?” “Please recommend fresh foods,” “Which clothes do you think are good for me today?” and “Please recommend good restaurants.” A total of seven situations were rated on a 5-point Likert scale (1 = Not at all; 5 = Very often; Cronbach’s α = .86, M = 3.26, SD = 0.82). Similarly, emotional conversation was measured by asking the extent to which participants made utterances such as “I’m bored,” “I’m depressed,” “I love you,” “Let’s play with me,” “Did you sleep well last night?” “Have a good night,” “I’m hungry,” “Who do you think is the most beautiful (good-looking) in the world?” “It’s my birthday today,” “Would you marry me?” “Get out of here,” “It’s annoying,” “Such a fool!” and “Shut up!” A total of fourteen situations were rated on a 5-point Likert scale (1 = Not at all; 5 = Very often; Cronbach’s α = .95, M = 2.31, SD = 0.90). Because the two conversation types were measured with different numbers of items (seven for functional and fourteen for emotional), summed scores would be expressed in different units for each type. Therefore, we used the mean of each set of items as its index, placing both conversation types on the same 1-to-5 metric.
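The rationale for mean rather than summed indices can be seen with a small example. The helper below is a hypothetical sketch with invented responses: a respondent who answers 3 to every item receives summed scores of 21 (seven functional items) and 42 (fourteen emotional items), which differ only because of the item count, whereas the mean scores are 3 on both and stay on the original 1-to-5 response metric.

```python
from statistics import fmean


def scale_index(responses, method="mean"):
    """Combine one respondent's item responses into a single index.
    'mean' keeps the 1-5 response metric; 'sum' grows with the number of items."""
    if method == "mean":
        return fmean(responses)
    if method == "sum":
        return sum(responses)
    raise ValueError(f"unknown method: {method!r}")


if __name__ == "__main__":
    functional = [3] * 7   # invented: answers 3 to all seven functional items
    emotional = [3] * 14   # invented: answers 3 to all fourteen emotional items
    print(scale_index(functional, "sum"), scale_index(emotional, "sum"))  # 21 42
    print(scale_index(functional), scale_index(emotional))                # 3.0 3.0
```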

Psychological Response to AI Agents

Participants were asked about the perceived humanness of AI agents with six items on a 5-point Likert scale (1 = Strongly disagree; 5 = Strongly agree; Berscheid et al., 1989; Cronbach’s α = .95, M = 2.61, SD = 0.99): While using AI agents, “I sometimes feel as if the AI agent is my friend,” “I sometimes feel as if the AI agent is a human being,” “I sometimes feel as if the AI agent is my family,” “I sometimes forget that the AI agent is a machine,” “I sometimes feel that the AI agent is a good partner to talk with,” and “I sometimes get comforted emotionally from the AI agent.” Intimacy was adapted from Snell et al. (1988) and measured with nine items on a 5-point Likert scale (1 = Strongly disagree; 5 = Strongly agree; Cronbach’s α = .94, M = 2.59, SD = 0.93): From time to time, “I share my worries with AI agents,” “I pour out my troubles to AI agents,” “I relieve my stress by using AI agents,” “I complain one thing and another to AI agents,” “I enjoy talking with AI agents,” “I feel comfortable about talking with AI agents,” “I feel relieved after sharing my concerns or issues to AI agents,” “I would like to keep talking with AI agents,” and “I feel emotionally connected to AI agents.”

Privacy-related Perception and Behavior toward AI Agents

The self-disclosure measure was adapted from Tsay-Vogel et al. (2018) and modified to fit AI agent usage situations. Nine items were rated on a 5-point Likert scale (1 = Strongly disagree; 5 = Strongly agree; Cronbach’s α = .96, M = 2.37, SD = 1.03): “I freely share my personal information with AI agents,” “I freely talk my daily life to AI agents,” “I freely share my concerns with AI agents,” “I give my name to AI agents,” “I give my career to AI agents,” “I give my age to AI agents,” “I share my health conditions with AI agents,” “I share my interests with AI agents,” and “I express my political orientation to AI agents.” AI privacy insensitivity was adapted from Cho et al. (2010) and assessed with two items on a 5-point Likert scale (1 = Strongly disagree; 5 = Strongly agree; r = .88, M = 2.25, SD = 0.97): “I am not too concerned about privacy invasion when using AI agents” and “I am not worried much about my personal information being partially compromised while using AI agents.”
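Cronbach’s α is less informative for a two-item measure, so AI privacy insensitivity is summarized with the inter-item Pearson correlation (r). The minimal Python sketch below computes r and a per-respondent two-item mean index. The helper names and the six hypothetical response pairs are illustrative only and are not the study’s data. Averaging the two items is an assumption here, chosen to match the mean-score approach used for the conversation indices.

```python
from math import sqrt

def pearson_r(x: list[float], y: list[float]) -> float:
    """Pearson correlation between two equally long lists of item responses."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("need two lists of equal length (n >= 2)")
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / sqrt(var_x * var_y)

def two_item_index(item1: list[float], item2: list[float]) -> list[float]:
    """Per-respondent mean of the two insensitivity items (hypothetical helper)."""
    return [(a + b) / 2 for a, b in zip(item1, item2)]

# Hypothetical responses (not the study's data).
not_concerned = [2, 1, 4, 3, 2, 5]   # "not too concerned about privacy invasion"
not_worried = [2, 2, 4, 3, 1, 5]     # "not worried much about ... compromised"
r = pearson_r(not_concerned, not_worried)
insensitivity = two_item_index(not_concerned, not_worried)
```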

RESULTS

A series of hierarchical regression analyses was conducted to test the hypotheses. The first model included the control variables (perceived risk of AI agents, privacy concerns, and gender) and the two types of conversation that respondents had with AI agents (functional and emotional). The second model added the two moderators: perceived humanness and intimacy. The third model added the interactions between functional conversation and the two moderators (i.e., functional conversation and perceived humanness, functional conversation and intimacy). The fourth model entered the interactions between emotional conversation and the two moderators (i.e., emotional conversation and perceived humanness, emotional conversation and intimacy) in place of those involving functional conversation. The final model included all four interactions.
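The block-entry logic above can be sketched in Python with NumPy. The sketch enters predictor blocks cumulatively and records R² after each step, following the sequence Model 1 → Model 2 → Model 3. Model 4 would swap the third block for the emotional-conversation product terms, and Model 5 would enter all four. The data are simulated. Gender is treated as a continuous toy variable purely for brevity. Mean-centering before forming product terms is an assumption, because the paper does not state how the interactions were constructed. The function names are hypothetical.

```python
import numpy as np

def r_squared(X: np.ndarray, y: np.ndarray) -> float:
    """OLS R^2 for y regressed on the columns of X plus an intercept."""
    X1 = np.column_stack([np.ones(len(y)), X])
    coef, *_ = np.linalg.lstsq(X1, y, rcond=None)
    resid = y - X1 @ coef
    centered = y - y.mean()
    return float(1 - (resid @ resid) / (centered @ centered))

def hierarchical_r2(blocks: list[np.ndarray], y: np.ndarray) -> list[float]:
    """Enter predictor blocks cumulatively and return R^2 after each step."""
    r2s, entered = [], []
    for block in blocks:
        entered.append(block)
        r2s.append(r_squared(np.column_stack(entered), y))
    return r2s

# Simulated toy data (not the study's).
rng = np.random.default_rng(0)
n = 500
controls = rng.normal(size=(n, 3))      # perceived risk, privacy concerns, gender (toy)
conversation = rng.normal(size=(n, 2))  # functional, emotional
moderators = rng.normal(size=(n, 2))    # perceived humanness, intimacy

# Center before forming product terms (an assumption; not stated in the paper).
func = conversation[:, 0] - conversation[:, 0].mean()
human = moderators[:, 0] - moderators[:, 0].mean()
intim = moderators[:, 1] - moderators[:, 1].mean()
func_interactions = np.column_stack([func * human, func * intim])

y = (0.2 * conversation[:, 0] + 0.7 * conversation[:, 1]
     + 0.3 * moderators[:, 0] + 0.1 * func * human
     + rng.normal(size=n))

steps = hierarchical_r2(
    [np.column_stack([controls, conversation]), moderators, func_interactions], y)
delta_r2 = np.diff([0.0] + steps)  # R^2 change contributed by each block
```

Because each model nests the previous one, R² cannot decrease across steps. A block’s contribution is judged by its R² change and the significance of its coefficients.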

Self-Disclosure

Model 1 tested the effects of the control variables and conversation types on users’ self-disclosure to AI agents. Perceived risk was significantly and negatively related to self-disclosure (β = -.06, p = .020): the more risk a user perceives in providing personal information, the less likely the user is to disclose to AI agents. Neither privacy concerns (p = .291) nor gender (p = .554) was significantly related to self-disclosure. Both functional (β = .21, p < .001) and emotional (β = .71, p < .001) conversations were significantly related to self-disclosure: the more functional or emotional conversation a user has with AI agents, the more likely the user is to disclose personal information to the devices. Hence, H1a and H2a were supported. To test whether the coefficients of functional and emotional conversation differed, an equality test (i.e., an incremental F-test) was conducted by constraining the two coefficients to be equal in the regression model. The constraint significantly worsened model fit (F = 96.90), indicating that the impact of emotional conversation was significantly greater than that of functional conversation. Therefore, H3a was also supported. Model 2 added the two moderators and found significant positive effects of perceived humanness (β = .24, p < .001) and intimacy (β = .31, p < .001) on self-disclosure: the more humanlike users perceive AI agents to be and the more intimacy they feel toward them, the more likely they are to share personal information with the agents. In Model 3, interactions between functional conversation and the two moderators (i.e., perceived humanness, intimacy) were added. Above and beyond the effects of the control variables, conversation types, perceived humanness, and intimacy, the interaction between functional conversation and perceived humanness was significant (β = .08, p = .035).
This finding suggests that the relationship between functional conversation and self-disclosure is stronger for users who perceive AI agents as more humanlike. However, the interaction between functional conversation and intimacy was not significant (p = .686). In Model 4, interactions between emotional conversation and the two moderators were examined after controlling for the variables in Models 1 and 2. Neither interaction was significant: emotional conversation and perceived humanness (p = .350), and emotional conversation and intimacy (p = .880). All four interactions were included in Model 5. The interaction between functional conversation and perceived humanness was marginally significant (β = .08, p = .055), and the other three interactions were not significant (functional conversation and intimacy, p = .490; emotional conversation and perceived humanness, p = .951; emotional conversation and intimacy, p = .648). Therefore, H4a was partially supported and H5a was not supported.
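The Model 1 equality test for the self-disclosure analysis compares two nested regressions. The full model estimates separate slopes for functional and emotional conversation. The restricted model forces a shared slope by entering their sum as a single predictor, and the incremental F statistic measures how much fit is lost under that constraint. The Python sketch below illustrates this on simulated data. The function names are hypothetical, and the paper reports neither the degrees of freedom nor whether raw or standardized coefficients were constrained. Comparing raw slopes is meaningful here because both conversation indices share the same 1–5 metric. A p-value could be obtained from the upper tail of the F distribution, for example with `scipy.stats.f.sf(f_stat, df_num, df_den)`.

```python
import numpy as np

def rss(X: np.ndarray, y: np.ndarray) -> float:
    """Residual sum of squares from OLS with an intercept."""
    X1 = np.column_stack([np.ones(len(y)), X])
    coef, *_ = np.linalg.lstsq(X1, y, rcond=None)
    resid = y - X1 @ coef
    return float(resid @ resid)

def equal_slopes_f(controls, x1, x2, y):
    """Incremental F-test of H0: b1 == b2 (one linear constraint).

    The restricted model enters x1 + x2 as one predictor, forcing a shared slope.
    Returns (F, df_num, df_den).
    """
    n = len(y)
    X_full = np.column_stack([controls, x1, x2])
    X_restricted = np.column_stack([controls, x1 + x2])
    rss_full, rss_restricted = rss(X_full, y), rss(X_restricted, y)
    df_num = 1                           # number of constraints
    df_den = n - X_full.shape[1] - 1     # residual df (intercept included)
    f_stat = ((rss_restricted - rss_full) / df_num) / (rss_full / df_den)
    return f_stat, df_num, df_den

# Simulated toy data (not the study's): emotional slope clearly exceeds functional.
rng = np.random.default_rng(1)
n = 1000
controls = rng.normal(size=(n, 3))
functional = rng.normal(size=n)
emotional = rng.normal(size=n)
y = (controls @ np.array([-0.1, 0.0, 0.0])
     + 0.2 * functional + 0.7 * emotional + rng.normal(size=n))
f_stat, df1, df2 = equal_slopes_f(controls, functional, emotional, y)
```

The restricted model is nested in the full model, so its residual sum of squares can never be smaller and F is never negative. A large F indicates that the equal-slope constraint does not fit the data.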

AI Privacy Insensitivity

In Model 1, all three control variables (perceived risk, β = -.19, p < .001; privacy concerns, β = -.13, p = .001; and gender, β = -.07, p = .035) were negatively related to AI privacy insensitivity. The more risk a user perceives in interacting with AI agents, the more sensitive the user is to AI agent-related privacy. Similarly, the more concerned a user is about privacy in general, the more sensitive the user is to AI agent-related privacy. Female users also tended to be more sensitive to AI agent-related privacy than male users. Unlike the findings for self-disclosure, only emotional conversation was positively related to AI privacy insensitivity (β = .40, p < .001); functional conversation was not (p = .745). The more emotional conversation a user has with AI agents, the more insensitive the user is likely to be to privacy issues related to AI agents. Thus, H1b was not supported, whereas H2b and H3b were supported. In Model 2, which added the two moderators, perceived humanness was significantly associated with AI privacy insensitivity (β = .27, p < .001), but intimacy was not (p = .127). In other words, the more humanlike users perceive AI agents to be, the less sensitive they are to privacy issues involving AI agents. Model 3 introduced the interactions between functional conversation and the two moderators (i.e., perceived humanness, intimacy). Perceived humanness marginally moderated the relationship between functional conversation and AI privacy insensitivity (β = .15, p = .054), whereas intimacy did not (p = .416). In Model 4, the interactions involving emotional conversation replaced those involving functional conversation. Both interactions were significant but ran in opposite directions. Perceived humanness strengthened the relationship between emotional conversation and AI privacy insensitivity (β = .33, p < .001), whereas intimacy weakened it (β = -.17, p = .027).
In the final model (Model 5), all interactions were entered. The interactions of (1) functional conversation and perceived humanness (p = .949) and (2) emotional conversation and intimacy (p = .112) were no longer significant, whereas the interaction of emotional conversation and perceived humanness remained significant (β = .33, p < .001). Because perceived humanness significantly moderated only the effect of emotional conversation, H4b was partially supported. H5b was not supported. Tables 2, 3, and 4 present detailed results of the hierarchical regression analyses for self-disclosure and AI privacy insensitivity.
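The moderation effects reported above follow the standard product-term specification. The equations below are a generic sketch of how an interaction such as emotional conversation and perceived humanness enters a regression. X denotes a conversation type, M a moderator, and ŷ the predicted outcome (e.g., AI privacy insensitivity). Covariates are omitted for brevity, and the symbols b₀ to b₃ are illustrative rather than the study’s estimates.

```latex
\begin{align}
\hat{y} &= b_0 + b_1 X + b_2 M + b_3 \,(X \times M) \\
\frac{\partial \hat{y}}{\partial X} &= b_1 + b_3 M
\end{align}
```

The slope of X is therefore not a single number but depends on M. A positive b₃ means the association between conversation type and the outcome grows stronger as the moderator increases, and a negative b₃ means it grows weaker. Evaluating b₁ + b₃M at, for example, one standard deviation below and above the mean of M gives the conventional simple slopes.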

The Impact of Conversation Type on Self-disclosure: The Moderating Role of Perceived Humanness and Intimacy

DISCUSSION

The first set of findings is that both functional and emotional conversations with AI agents are significantly associated with self-disclosure to AI agents, whereas only emotional conversation is significantly associated with AI privacy insensitivity. Comparing the two types of conversation, emotional conversation was more strongly associated than functional conversation with both self-disclosure and AI privacy insensitivity. These findings suggest that emotional conversations with AI agents tend to be more candid and revealing. This creates a potential risk of privacy invasion when users disclose sensitive information intertwined with their emotions, moods, and sentiments. Overall, the results show that the type of conversation matters for self-disclosure and AI privacy insensitivity in the context of hyperpersonal communication. The findings also contribute to the literature on human-computer interaction (HCI) and psychology by providing empirical evidence that emotional conversations with AI agents are associated with greater self-disclosure and greater AI privacy insensitivity. This association is important for understanding the dynamics of human-AI relationships. Future research could examine the psychological mechanisms behind this phenomenon and develop theories of trust and relationship-building with non-human entities.

Our findings also revealed that the relationships of conversation type with self-disclosure and with AI privacy insensitivity vary with users’ attitudes toward AI agents. Perceived humanness positively moderated the relationship between functional conversation and self-disclosure, suggesting that users tend to disclose more about themselves, particularly when they perceive AI agents as humanlike. The fundamental assumption of the CASA framework is that the disclosure process and outcomes are similar regardless of whether the partner is a person or a machine (Krämer et al., 2012). Given our result, self-disclosure through functional conversation seems to require that users perceive their conversational partner as humanlike. In contrast, the relationship between emotional conversation and self-disclosure was not moderated by perceived humanness. This may indicate that users who engage in emotional conversation with an AI agent already feel and treat the agent as a human counterpart. Such users may share personal thoughts, feelings, and experiences to obtain emotional, relational, and psychological benefits, as suggested by the Perceived Understanding framework (Reis et al., 2017). These findings suggest that the CASA framework could be expanded to specify the conditions under which individuals are more likely to self-disclose to AI agents. In particular, they highlight interaction style (i.e., conversation type) and the perceived humanness of the AI agent as critical factors in facilitating self-disclosure. The findings also have practical implications, particularly for designing AI agents that interact with users in ways that are both effective and respectful of their privacy.
That perceived humanness strengthens the association between functional conversation and self-disclosure indicates that users are more likely to share personal information with AI agents they perceive as humanlike.

Another finding is that emotional conversations with AI agents are more strongly associated with privacy insensitivity when users perceive the agents as humanlike. This finding highlights the role of emotional conversation in privacy insensitivity during social interaction with AI agents. It also points to the conditions under which AI agent users are most susceptible to becoming insensitive to their privacy (i.e., frequent emotional conversation combined with high perceived humanness). One possible explanation is that perceived humanness may increase familiarity with and trust in AI agents. That trust, in turn, may foster the belief that information shared with the agents will be kept confidential. The finding contributes to the understanding of the dynamics of human-AI interaction. It suggests that when AI agents are perceived as more humanlike, users who engage in emotionally charged conversations with them may become less concerned about AI-related privacy. This is particularly relevant to mental health care, where AI-based conversational agents are used to promote well-being. H. Li et al. (2023) highlighted the effectiveness of AI-based conversational agents in reducing symptoms of depression and distress, emphasizing the importance of the quality of human-AI therapeutic relationships.

Although not hypothesized, it is noteworthy that users’ general privacy concern was associated with lower AI privacy insensitivity (i.e., greater sensitivity). Yet, as observed in this study, emotional conversation was associated with greater AI privacy insensitivity, and this pattern was even more pronounced among users who perceived AI agents as more humanlike. On the one hand, users’ privacy concerns may encourage AI privacy sensitivity. On the other hand, as individuals engage in emotional conversations with AI agents, their AI privacy sensitivity appears to erode when they perceive the agents as highly humanlike. This result calls for closer scrutiny of the relationship between types of conversation with AI agents and the privacy paradox. Future studies could investigate how AI privacy insensitivity develops as users engage in different types of conversation with AI agents. Furthermore, research on the potential for secret collusion among generative AI agents has raised concerns about privacy and security challenges. These challenges include the unauthorized sharing of information and other forms of unwanted agent coordination. This highlights the need for continuous monitoring and for mitigation measures that protect user privacy in interactions with AI agents.

Limitations and Suggested Future Research

This study has several limitations. First, it included variables such as emotional conversation, self-disclosure, and AI privacy insensitivity, which are sensitive and thus difficult to measure accurately through self-reports. Social desirability may have affected the measurement of these variables and the observed results (Taddicken, 2014). Self-reported measures were useful for opening a novel line of inquiry into privacy with AI agents and for informing the design of AI-mediated interactions involving self-disclosure. Even so, future studies would benefit from measuring these variables in a less obtrusive, more naturalistic manner. Second, the present study focused only on self-disclosure and privacy insensitivity regarding AI agents. Voice assistants such as ‘Siri’ on mobile phones share very similar technical and communication features with AI agents. Future studies should therefore examine similarities and differences between AI agents and other voice assistants to improve generalizability. Third, this study did not examine the relationship between self-disclosure and AI privacy insensitivity. Although one might plausibly cause the other, the two were treated as parallel outcomes, mainly because the study relied on cross-sectional data. Future research could examine whether and how self-disclosure leads to AI privacy insensitivity or vice versa. Likewise, general privacy concerns and perceived risk, which were used as covariates in this study, merit examination in relation to these two dependent variables. Testing the causal relationships among these variables would shed light on the privacy paradox. Relatedly, because the data are cross-sectional, the relationships reported here should not be interpreted as causal.
Lastly, future research could explore alternative explanations for increased self-disclosure to AI agents. For instance, the machine heuristic perspective suggests that people may disclose more information to AI agents not because they perceive them as humanlike but because of AI-specific perceptions of trustworthiness and data security (Madhavan et al., 2006; Sundar & Nass, 2000). Investigating the role of these machine-specific heuristics in driving self-disclosure, alongside the influence of anthropomorphic features, could provide a more comprehensive understanding of the mechanisms underlying user behavior in human-AI interactions.

In conclusion, the type of conversation (functional or emotional) matters for how users interact with AI agents and is associated with both self-disclosure and AI privacy insensitivity. These findings have important implications for future research and for the ethical design and use of AI agents across industries.

Acknowledgments

This research was supported by the Yonsei University Research Fund of 2024 (2024-22-0097) and the Yonsei Signature Research Cluster Program of 2024 (2024-22-0168).

Disclosure Statement

No potential conflict of interest was reported by the author.

References

- Alpaydin, E. (2020). Introduction to machine learning. MIT Press.

- Altman, I., & Taylor, D. A. (1973). Social penetration: The development of interpersonal relationships. Holt, Rinehart and Winston.

- Bartsch, M., & Dienlin, T. (2016). Control your Facebook: An analysis of online privacy literacy. Computers in Human Behavior, 56, 147–154. https://doi.org/10.1016/j.chb.2015.11.022

- Berscheid, E., Snyder, M., & Omoto, A. M. (1989). The relationship closeness inventory: Assessing the closeness of interpersonal relationships. Journal of Personality and Social Psychology, 57(5), 792–807. https://doi.org/10.1037/0022-3514.57.5.792

- Broussard, M. (2018). Artificial unintelligence: How computers misunderstand the world. MIT Press.

- Brown, B. (2001). Studying the internet experience. Hewlett-Packard Company. http://shiftleft.com/mirrors/www.hpl.hp.com/techreports/2001/HPL-2001-49.pdf

- Carver, C. S., Scheier, M. F., & Weintraub, J. K. (1989). Assessing coping strategies: A theoretically based approach. Journal of Personality and Social Psychology, 56(2), 267–283. https://doi.org/10.1037/0022-3514.56.2.267

- CBS News. (2018, October 9). How will digital assistants like Alexa or Siri change us? https://www.cbsnews.com/news/how-will-digital-assistants-like-alexa-siri-google-change-us/

- Chen, Q., Leaman, R., Allot, A., Luo, L., Wei, C.-H., Yan, S., & Lu, Z. (2020). Artificial Intelligence (AI) in action: Addressing the COVID-19 pandemic with Natural Language Processing (NLP). arXiv. https://doi.org/10.48550/arXiv.2010.16413

- Cheng, Y., & Jiang, H. (2020). How do AI-driven chatbots impact user experience? Examining gratifications, perceived privacy risk, satisfaction, loyalty, and continued use. Journal of Broadcasting and Electronic Media, 64(4), 592–614. https://doi.org/10.1080/08838151.2020.1834296

- Cho, H., Lee, J.-S., & Chung, S. (2010). Optimistic bias about online privacy risks: Testing the moderating effects of perceived controllability and prior experience. Computers in Human Behavior, 26(5), 987–995. https://doi.org/10.1016/j.chb.2010.02.012

- Choi, H., Park, J., & Jung, Y. (2018). The role of privacy fatigue in online privacy behavior. Computers in Human Behavior, 81, 42–51. https://doi.org/10.1016/j.chb.2017.12.001

- Cognitive Market Research. (2024). AI artificial intelligence speaker market report 2024 (Global edition). https://www.cognitivemarketresearch.com/ai-artificial-intelligence-speaker-market-report

- Corradini, I. (2020). Building a cybersecurity culture in organizations (Vol. 284). Springer International Publishing.

- Cyr, D., Head, M., & Pan, B. (2009). Exploring human images in website design: A multi-method approach. MIS Quarterly, 33(3), 539–536. https://doi.org/10.2307/20650308

- Derlega, V. J., Winstead, B. A., Mathews, A., & Braitman, A. L. (2008). Why does someone reveal highly personal information? Attributions for and against self-disclosure in close relationships. Communication Research Reports, 25(2), 115–130. https://doi.org/10.1080/08824090802021756

- Dörr, K. N. (2016). Mapping the field of algorithmic journalism. Digital Journalism, 4(6), 700–722. https://doi.org/10.1080/21670811.2015.1096748

- Dubois, D. J., Kolcun, R., Mandalari, A. M., Paracha, M. T., Choffnes, D., & Haddadi, H. (2020). When speakers are all ears: Characterizing misactivations of IoT smart speakers. Proceedings on Privacy Enhancing Technologies, 2020(4), 255–276. https://doi.org/10.2478/popets-2020-0072

- Eyssel, F., & Hegel, F. (2012). (S)he’s got the look: Gender stereotyping of robots. Journal of Applied Social Psychology, 42(9), 2213–2230. https://doi.org/10.1111/j.1559-1816.2012.00937.x

- Gambino, A., Fox, J., & Ratan, R. A. (2020). Building a stronger CASA: Extending the computers are social actors paradigm. Human-Machine Communication, 1(1), 71–85. https://doi.org/10.30658/hmc.1.5

- Gefen, D., & Straub, D. W. (1997). Gender differences in the perception and use of e-mail: An extension to the technology acceptance model. MIS Quarterly, 21(4), 389–400. https://doi.org/10.2307/249720

- Gehl, R. W., & Bakardjieva, M. (2017). Socialbots and their friends: Digital media and the automation of sociality. Routledge.

- Green, K., Derlega, V. J., & Mathews, A. (2006). Self-disclosure in personal relationships. In A. L. Vangelisti & D. Perlman (Eds.), The Cambridge handbook of personal relationships (pp. 409–427). Cambridge University Press.

- Gunkel, D. J. (2020). An introduction to communication and artificial intelligence. John Wiley & Sons.

- Guzman, A. L. (2019). Voices in and of the machine: Source orientation toward mobile virtual assistants. Computers in Human Behavior, 90, 343–350. https://doi.org/10.1016/j.chb.2018.08.009

- Guzman, A. L., & Lewis, S. C. (2020). Artificial intelligence and communication: A human-machine communication research agenda. New Media and Society, 22(1), 70–86. https://doi.org/10.1177/1461444819858691

- Hallam, C., & Zanella, G. (2017). Online self-disclosure: The privacy paradox explained as a temporally discounted balance between concerns and rewards. Computers in Human Behavior, 68, 217–227. https://doi.org/10.1016/j.chb.2016.11.033

- Hermann, E. (2022). Anthropomorphized artificial intelligence, attachment, and consumer behavior. Marketing Letters, 33(1), 157–162. https://doi.org/10.1007/s11002-021-09587-3

- Hernandez-Ortega, B., & Ferreira, I. (2021). How smart experiences build service loyalty: The importance of consumer love for smart voice assistants. Psychology & Marketing, 38(7), 1122–1139. https://doi.org/10.1002/mar.21497

- Hinds, J., Williams, E. J., & Joinson, A. N. (2020). “It wouldn’t happen to me”: Privacy concerns and perspectives following the Cambridge Analytica scandal. International Journal of Human-Computer Studies, 143, 102498. https://doi.org/10.1016/j.ijhcs.2020.102498

- Ho, A., Hancock, J., & Miner, A. S. (2018). Psychological, relational, and emotional effects of self-disclosure after conversations with a chatbot. Journal of Communication, 68(4), 712–733. https://doi.org/10.1093/joc/jqy026

- Hoffmann, C. P., Lutz, C., & Ranzini, G. (2016). Privacy cynicism: A new approach to the privacy paradox. Cyberpsychology: Journal of Psychosocial Research on Cyberspace, 10(4), 7. https://doi.org/10.5817/CP2016-4-7

- Hopstaken, J. F., van der Linden, D., Bakker, A. B., & Kompier, M. A. (2015). A multifaceted investigation of the link between mental fatigue and task disengagement. Psychophysiology, 52(3), 305–315. https://doi.org/10.1111/psyp.12339

- Hunter, T. (2022, March 7). Needy, overconfident voice assistants are wearing on their owners’ last nerves. The Washington Post. https://www.washingtonpost.com/technology/2022/03/07/voice-assistants-wrong-answers/

- Ignatius, E., & Kokkonen, M. (2007). Factors contributing to verbal self-disclosure. Nordic Psychology, 59(4), 362–391. https://doi.org/10.1027/1901-2276.59.4.362

- Kang, H. M. (2019, July 10). AI speaker ‘emotional conversation’ services popular among solitary senior citizens. The Korea Bizwire. http://koreabizwire.com/ai-speaker-emotional-conversation-services-popular-among-solitary-senior-citizens/140435

- Katz, J. E. (2024). Interesting aspects of theories for media and communication studies: Opportunities as reflected in an old hidden gem. Asian Communication Research, 21(1), 52–58. https://doi.org/10.20879/acr.2024.21.001

- Kennedy, J. S. (1992). The new anthropomorphism. Cambridge University Press.

- Ki, C.-W., Cho, E., & Lee, J.-E. (2020). Can an Intelligent Personal Assistant (IPA) be your friend? Para-friendship development mechanism between IPAs and their users. Computers in Human Behavior, 111, 106412. https://doi.org/10.1016/j.chb.2020.106412

- Kim, J., & Im, I. (2023). Anthropomorphic response: Understanding interactions between humans and artificial intelligence agents. Computers in Human Behavior, 139, 107512. https://doi.org/10.1016/j.chb.2022.107512

- Kokolakis, S. (2017). Privacy attitudes and privacy behaviour: A review of current research on the privacy paradox phenomenon. Computers and Security, 64, 122–134. https://doi.org/10.1016/j.cose.2015.07.002

- Krämer, N. C., von der Pütten, A., & Eimler, S. (2012). Human-agent and human-robot interaction theory: Similarities to and differences from human-human interaction. In M. Zacarias & J. V. de Oliveira (Eds.), Human-computer interaction: The agency perspective (pp. 215–240). Springer-Verlag.

- Lankton, N. K., McKnight, D. H., & Tripp, J. (2015). Technology, humanness, and trust: Rethinking trust in technology. Journal of the Association for Information Systems, 16(10), 880–918. https://doi.org/10.17705/1jais.00411

- Lau, J., Zimmerman, B., & Schaub, F. (2018). Alexa, are you listening?: Privacy perceptions, concerns and privacy-seeking behaviors with smart speakers. Proceedings of the ACM on Human-Computer Interaction, 2(CSCW), 102.

- Laurenceau, J.-P., Barrett, L. F., & Pietromonaco, P. R. (1998). Intimacy as an interpersonal process: The importance of self-disclosure, partner disclosure, and perceived partner responsiveness in interpersonal exchanges. Journal of Personality and Social Psychology, 74(5), 1238–1251. https://doi.org/10.1037/0022-3514.74.5.1238

- Lee, N., & Kim, J.-H. (2019). Emotion vs. function: The effects of AI speaker’s recognition on product attitude and continuance intention. Journal of Digital Contents Society, 20(12), 2535–2543. https://doi.org/10.9728/dcs.2019.20.12.2535

- Li, H., Zhang, R., Lee, Y. C., Kraut, R. E., & Mohr, D. C. (2023). Systematic review and meta-analysis of AI-based conversational agents for promoting mental health and well-being. NPJ Digital Medicine, 6(1), 236. https://doi.org/10.1038/s41746-023-00979-5

- Li, J., Chen, C., Azghadi, M. R., Ghodosi, H., Pan, L., & Zhang, J. (2023). Security and privacy problems in voice assistant applications: A survey. Computers & Security, 134, 103448. https://doi.org/10.1016/j.cose.2023.103448

- Liao, Y., Vitak, J., Kumar, P., Zimmer, M., & Kritikos, K. (2019). Understanding the role of privacy and trust in intelligent personal assistant adoption. In N. G. Taylor, C. Christian-Lamb, M. H. Martin, & B. Nardi (Eds.), Information in contemporary society: 14th International Conference, iConference 2019 (pp. 102–113). Springer.

- Lopatovska, I., Rink, K., Knight, I., Raines, K., Cosenza, K., Williams, H., & Martinez, A. (2019). Talk to me: Exploring user interactions with the Amazon Alexa. Journal of Librarianship and Information Science, 51(4), 984–997. https://doi.org/10.1177/0961000618759414

- Lucas, G. M., Gratch, J., King, A., & Morency, L. P. (2014). It’s only a computer: Virtual humans increase willingness to disclose. Computers in Human Behavior, 37, 94–100. https://doi.org/10.1016/j.chb.2014.04.043

- Lutz, C., & Newlands, G. (2021). Privacy and smart speakers: A multi-dimensional approach. The Information Society, 37(3), 147–162. https://doi.org/10.1080/01972243.2021.1897914

- Lutz, C., & Tamó-Larrieux, A. (2018). Communicating with robots: ANTalyzing the interaction between healthcare robots and humans with regards to privacy. In A. Guzman (Ed.), Human-machine communication: Rethinking communication, technology, and ourselves (pp. 145–165). Peter Lang.

- Lutz, C., & Tamó-Larrieux, A. (2020). The robot privacy paradox: Understanding how privacy concerns shape intentions to use social robots. Human-Machine Communication, 1, 87–111. https://doi.org/10.30658/hmc.1.6

- Lutz, C., Hoffmann, C. P., & Ranzini, G. (2020). Data capitalism and the user: An exploration of privacy cynicism in Germany. New Media & Society, 22(7), 1168–1187. https://doi.org/10.1177/1461444820912544

- Madhavan, P., Wiegmann, D. A., & Lacson, F. C. (2006). Automation failures on tasks easily performed by operators undermine trust in automated aids. Human Factors, 48(2), 241–256. https://doi.org/10.1518/001872006777724408

- Malhotra, N. K., Kim, S. S., & Agarwal, J. (2004). Internet Users’ Information Privacy Concerns (IUIPC): The construct, the scale, and a causal model. Information Systems Research, 15(4), 336–355. https://doi.org/10.1287/isre.1040.0032

- Manheim, K., & Kaplan, L. (2019). Artificial intelligence: Risks to privacy and democracy. The Yale Journal of Law & Technology, 21, 106–188.

- Meng, J., & Dai, Y. (2021). Emotional support from AI chatbots: Should a supportive partner self-disclose or not? Journal of Computer-Mediated Communication, 26(4), 207–222. https://doi.org/10.1093/jcmc/zmab005

- Nass, C., & Moon, Y. (2000). Machines and mindlessness: Social responses to computers. Journal of Social Issues, 56(1), 81–103. https://doi.org/10.1111/0022-4537.00153

- Nass, C., Steuer, J., & Tauber, E. R. (1994). Computers are social actors. CHI ’94: Proceedings of the SIGCHI Conference on Human Factors in Computing Systems (pp. 72–78). ACM. https://doi.org/10.1145/259963.260288

- Norberg, P. A., Horne, D. R., & Horne, D. A. (2007). The privacy paradox: Personal information disclosure intentions versus behaviors. Journal of Consumer Affairs, 41(1), 100–126. https://doi.org/10.1111/j.1745-6606.2006.00070.x

- Nowak, K. L., & Biocca, F. (2003). The effect of the agency and anthropomorphism on users’ sense of telepresence, copresence, and social presence in virtual environments. Presence: Teleoperators & Virtual Environments, 12(5), 481–494. https://doi.org/10.1162/105474603322761289

- Pardes, A. (2018, January 31). The emotional chatbots are here to probe our feeling. WIRED. https://www.wired.com/story/replika-open-source/

Patterson, M. L. (1982). A sequential functional model of nonverbal exchange. Psychological Review, 89(3), 231–249. https://doi.org/10.1037/0033-295X.89.3.231

Pennebaker, J. W. (1997). Writing about emotional experiences as a therapeutic process. Psychological Science, 8(3), 162–166. https://doi.org/10.1111/j.1467-9280.1997.tb00403.x

Peter, J., & Kühne, R. (2018). The new frontier in communication research: Why we should study social robots. Media and Communication, 6(3), 73–76. https://doi.org/10.17645/mac.v6i3.1596

Pradhan, A., Findlater, L., & Lazar, A. (2019). “Phantom friend” or “just a box with information”: Personification and ontological categorization of smart speaker-based voice assistants by older adults. Proceedings of the ACM on Human-Computer Interaction, 3(CSCW), Article 214.

Purington, A., Taft, J. G., Sannon, S., Bazarova, N. N., & Taylor, S. H. (2017). “Alexa is my new BFF”: Social roles, user satisfaction, and personification of the Amazon Echo. CHI EA ’17: Proceedings of the 2017 CHI Conference Extended Abstracts on Human Factors in Computing Systems (pp. 2853–2859). ACM. https://doi.org/10.1145/3027063.3053246

Ramadan, T., Hikmah, I., Putri, N. S., & Indriyanti, P. (2022). Language styles analysis utilized by Nadiem Makarim at the Singapore Summit 2020. Journal of English Language and Language Teaching, 6(2), 1–10.

Reeves, B., & Nass, C. (1996). The media equation: How people treat computers, television, and new media like real people. Cambridge University Press.

Reis, H. T., Lemay, E. P., Jr., & Finkenauer, C. (2017). Toward understanding understanding: The importance of feeling understood in relationships. Social and Personality Psychology Compass, 11(3), e12308. https://doi.org/10.1111/spc3.12308

Short, J., Williams, E., & Christie, B. (1976). The social psychology of telecommunications. Wiley.

Snell, W. E., Miller, R. S., & Belk, S. S. (1988). Development of the emotional self-disclosure scale. Sex Roles, 18(1–2), 59–73.

Sprecher, S., & Hendrick, S. S. (2004). Self-disclosure in intimate relationships: Associations with individual and relationship characteristics over time. Journal of Social and Clinical Psychology, 23(6), 857–877. https://doi.org/10.1521/jscp.23.6.857.54803

Sundar, S. S. (2020). Rise of machine agency: A framework for studying the psychology of Human-AI Interaction (HAII). Journal of Computer-Mediated Communication, 25(1), 74–88. https://doi.org/10.1093/jcmc/zmz026

Sundar, S. S., & Kim, J. (2019). Machine heuristic: When we trust computers more than humans with our personal information. CHI ’19: Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems (Paper No. 538). ACM. https://doi.org/10.1145/3290605.3300768

Sundar, S. S., & Nass, C. (2000). Source orientation in human-computer interaction: Programmer, networker, or independent social actor? Communication Research, 27(6), 683–703. https://doi.org/10.1177/009365000027006001

Sundar, S. S., Bellur, S., & Lee, H. M. (2024). Concept explication: At the core of it all. Asian Communication Research, 21(1), 10–18. https://doi.org/10.20879/acr.2024.21.010

Taddicken, M. (2014). The ‘privacy paradox’ in the social web: The impact of privacy concerns, individual characteristics, and the perceived social relevance on different forms of self-disclosure. Journal of Computer-Mediated Communication, 19(2), 248–273. https://doi.org/10.1111/jcc4.12052

Tsay-Vogel, M., Shanahan, J., & Signorielli, N. (2018). Social media cultivating perceptions of privacy: A 5-year analysis of privacy attitudes and self-disclosure behaviors among Facebook users. New Media & Society, 20(1), 141–161. https://doi.org/10.1177/1461444816660731

Yancey, M. (2022, November 2). 50% of U.S. consumers use voice search daily. UpCity. https://upcity.com/experts/consumers-and-voice-search-study/